Last October in The Guardian, Georgia Wright, Liat Olenick and Amy Westervelt wrote the column “The dirty dozen: meet America’s top climate villains.” The piece included heads of fossil fuel companies Chevron, Exxon and Koch Industries; two toadies of fossil fuel companies, climate policy obstructionist senators Mitch McConnell and Joe Manchin; financiers of fossil fuel companies (see Day 5 and Day 15 for breaking up with the big banks); and the enablers of misinformation and disinformation, Rupert Murdoch and Mark Zuckerberg.

While Murdoch sows doubt about climate change via his media empire, News Corp and Fox Corporation, Zuckerberg profits from climate misinformation and disinformation spreading like, well, wildfire on Facebook—and Instagram and WhatsApp.

Divisive social media posts increase engagement—and climate is divisive, thanks to the politicization of physics, chemistry and biology by fossil fuel interests. The more time users spend on Facebook, the more ads they see and the more money Facebook earns. In 2020, Facebook took in $9.6 million from 25 fossil fuel companies for ads that users viewed 431 million times, according to InfluenceMap, an independent think tank that analyzes how corporate lobbying affects climate change policy.

Beyond the misinformation that proliferates on social media, often shared innocently enough by users, there are outright campaigns to purposefully confuse and divide the public.

Fossil fuel interests and their front groups are known to be using similar methods to manipulate public opinion, and the collective effect is a poisoning and weaponization of social media to advance the cause of denial, deflection, doomism, and delay. The basic strategy is as follows: Use professional trolls to amplify a particular meme on social media, and send in an army of bots to amplify it further, baiting genuine individuals to join the fray. The idea is to create a massive food fight (the term is apropos, given that many of the online tussles, as we will see, are actually about individual food preferences) and thereby generate polarization and conflict. — climate scientist Michael E Mann, The New Climate War

The big changes we need will come when social media platforms stop profiting from disinformation (and cut their ties with fossil fuel companies). Meanwhile, we can help prevent mis- and disinformation from spreading.

Stop the spread

No, not that spread, although the following strategies also apply to stopping the spread of Covid disinformation on social media.

Understand your biases. Your platform of choice observes what you interact with most and serves up more of the same. Recognize that you’ll more likely share posts that align with your beliefs.

“Be aware before you S.H.A.R.E.” Early on in the pandemic, the WHO created resources for fighting the “Covid infodemic.” It recommends people follow the checklist pictured below before sharing posts.

Share less, create more. Post your own original content only and you won’t have to worry about spreading disinformation (assuming you don’t write disinformation, which I’m sure you won’t!).

Check the date on the story. Some posts will feature images from past events and claim they are happening now in order to cause some sort of strong reaction (shock, anger, alarm and so on) and a share.

Recognize satire! Even if you don’t recognize The Borowitz Report as satire, the banner says right there, “Not the News.”

Get a dose of “prebunking.” Prevention works best. Even after people learn that a piece of information they had believed to be true is actually false, the damage has been done and they still tend to believe it. Prebunking inoculates people against disinformation before the fact by exposing them to a small amount of disinformation and then explaining why the information is false. You can get your prebunk inoculation by playing one of the short games Go Viral!, Bad News or Cranky Uncle. Kids can learn how to spot disinformation with the Bad News Junior version.

In all three games, the player takes on the persona of a bad actor on social media and learns how to manipulate others in order to spread disinformation, using emotionally charged words, fake experts and conspiracy theories, for example. After playing, you can more easily spot these tactics online. Playing just once inoculates people against disinformation for a couple of months, after which another small dose offers further protection.

Report the misinformation. This WHO page lists 10 online platforms, with each one linking to instructions on how to report misinformation on it. The platforms are Facebook, YouTube, Twitter, Instagram, WhatsApp, TikTok, LinkedIn, Viber, VK and Kwai.

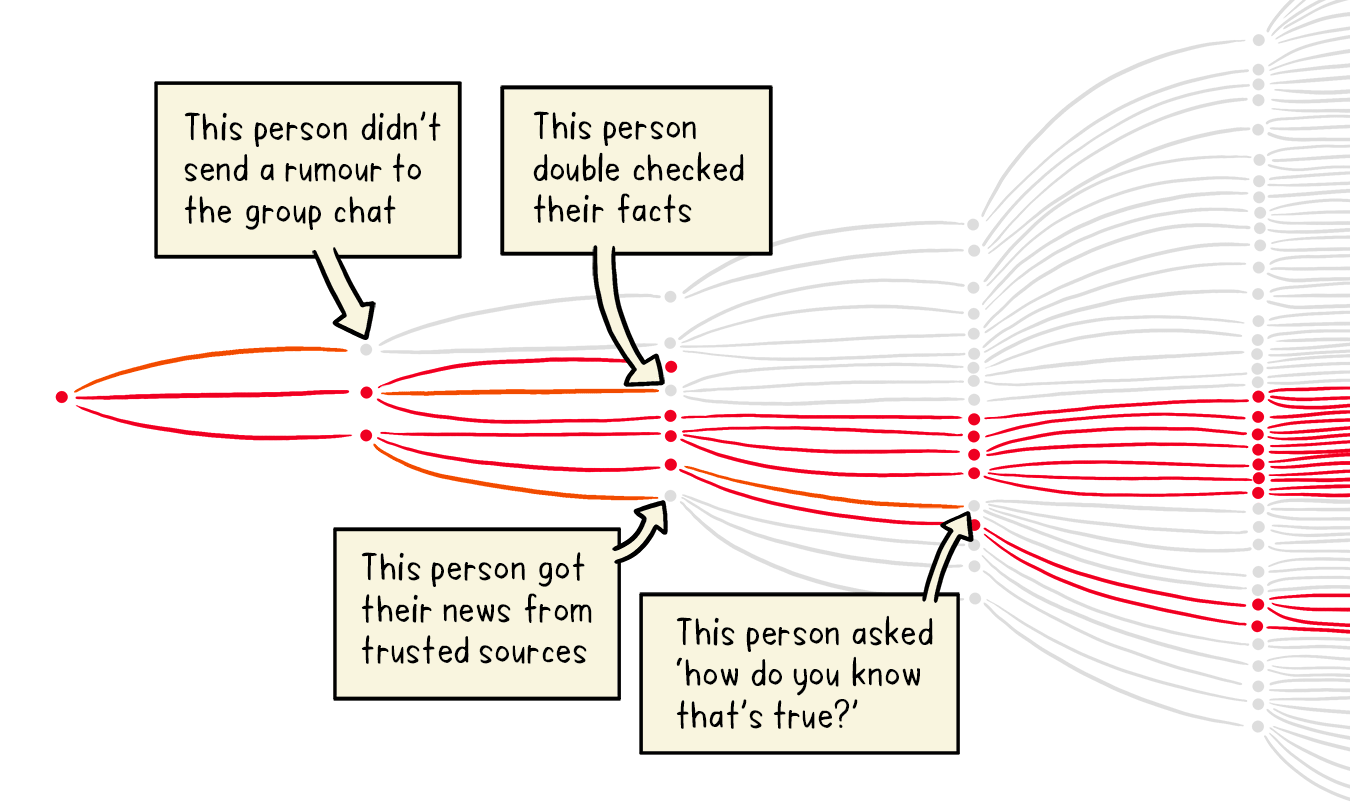

Correct misinformation when you come across it. When you retweet, you amplify. Rather than retweeting a post and adding the caption “this is fake,” take a screenshot of it and share that with the caption “this is fake.” Also, try to be diplomatic when correcting people on social media. Otherwise, they’ll likely double down. You can simply ask, “How do you know that’s true?” as in the graphic at the top of this newsletter. And if someone asks that question before you do, ask anyway. The more people question the post, the less likely others will share it.

Finally, don’t feel deficient if you’ve fallen for misinformation!

No one is completely immune. Indeed, there is now evidence that smarter people may sometimes be even more vulnerable to certain ideas, since their greater brainpower simply allows them to rationalise their (incorrect) beliefs. — The Guardian, “Why smart people are more likely to believe fake news”

If you haven’t seen it, The Social Dilemma explores “the dangerous human impact of social networking, with tech experts sounding the alarm on their own creations.” I also have another book recommendation. How to Do Nothing: Resisting the Attention Economy, by Jenny Odell, inspires readers to slow down, look up and take in the world around them.

First, I have appreciated every single newsletter, although the rhythm of one per day is quite intense, so I have kept them all and will go back to some that I have not yet digested :).

I am sincerely worried about what disinformation is doing to the World. Every single platform is plagued with it. Even a platform like Nextdoor that was originally intended to truly connect neighbors…

I wanted to add to this discussion this episode of Hidden Brain on NPR, which discusses how to disprove misinformation: https://www.npr.org/2017/03/13/519661419/when-it-comes-to-politics-and-fake-news-facts-arent-enough

Sadly, it seems to suggest that it is hard work and nearly impossible once the misinformation is engraved in somebody’s brain.

And this new generation of teenagers who rely heavily on social platforms for their information is the one that needs daily drills on how to recognize facts. It is a daily discussion at our house with our teenager. His entire school saw the Social Dilemma with us, parents.

The major issue that I also see about the propagation of false information is that we all seem to remain in our echo chambers: look, I am pretty sure that the great majority of subscribers to these newsletters are already convinced. And yes, it gives me more talking points, and yes I keep sharing what I learn but it is hard to reach people out of our own echo chambers.

Thanks again Anne-Marie!

Last night the school district did a Zoom presentation for parents on Digital Awareness. I have been looking at Instagram, TikTok and Discord, as these seem to be what my teenagers are addicted to. One of them keeps saying something is right (disputable) because she saw a video on TikTok saying so. We parents discussed that these types of sites do not develop pragmatic skills in language. A parent brought up The Social Dilemma, which the presenters said was good. I am going to watch it with my kids tonight. I will try to get my kids to play the Bad News Junior game with me. Thanks for the book suggestion.